A monopoly in a global capitalist system is an oxymoron. Still, when it comes to hardware designed for AI, Nvidia’s GPUs reign supreme and have, in fact, become the industry standard.

In the past, semi-monopolies within a free market have never lasted long. Sooner or later, the market rebalanced itself, sometimes drastically for the leaders of the moment. Cisco Systems is the classic case: a hardware sector leader in the early 2000s, it lost a large part of its stock value when other companies began selling the same products.

Nvidia’s financial situation appears rosy, with 36% annual growth and exceptional results reported in November 2025: revenue of $57.01 billion (against an estimated $54.2 billion), EPS of $1.30 (vs. $1.25 estimated), and data center revenue growth of 112% YoY.
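To put the size of the beat in perspective, the surprise relative to consensus can be worked out directly from the figures quoted above. The following is a minimal Python sketch; the helper name `surprise_pct` is illustrative and not taken from any library.

```python
def surprise_pct(actual: float, estimate: float) -> float:
    """Percentage by which a reported figure exceeds (or misses) its estimate."""
    return (actual - estimate) / estimate * 100.0

# Figures as quoted in the article (headline figure in billions of USD).
headline_surprise = surprise_pct(57.01, 54.2)
eps_surprise = surprise_pct(1.30, 1.25)

print(f"Headline surprise: {headline_surprise:.1f}%")  # about 5.2%
print(f"EPS surprise: {eps_surprise:.1f}%")            # about 4.0%
```

Both figures beat estimates by roughly 4 to 5 percent, a solid but not outsized margin.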

As in the case of Cisco Systems, the first factor of concern for NVDA stock is competition from companies building chips that can surpass its GPUs in terms of cost and efficiency. The so-called hyperscalers have been working on developing in-house chips for some time: Google with its TPUs, Meta with MTIA, and Amazon with Trainium chips.

An extraordinary peculiarity of this context is that Nvidia’s main competitors are also its largest customers. In fact, a concern of no minor importance for the stock is the extremely high customer concentration. The vast majority of Nvidia chip sales go to the same hyperscalers (Microsoft, Meta, Amazon, Google, and Oracle) that are spending billions of dollars to develop technologies that can outclass it. If these companies slowed their purchases, Nvidia would suffer immediate impacts.

The recent dynamics of Nvidia’s stock price support these concerns. Following the release of record results, the stock rose 5% in after-market trading, only to drop 3.2% the following day. The catalyst for this retreat was Google’s release of its new LLM, Gemini 3. As can be seen on the heatmap, the competition is intensifying. At the moment, Gemini 3 ranks as one of the most performant models, significantly surpassing the competition, and, most importantly, it was trained entirely on Tensor Processing Units (TPUs), Google’s proprietary chips, instead of Nvidia GPUs.

Google’s TPUs, designed and developed specifically for AI workloads, are more cost-effective and consume less power. In contrast, Nvidia GPUs were initially created for gaming and only became the AI industry standard later on. Recent statements by Nvidia CEO Jensen Huang about the difficulty companies would allegedly encounter in switching to a platform other than his own read as a clear assertion of the will to maintain a monopolistic position, and as a sign of an underlying and growing concern about losing it.

The lower inference costs of TPUs compared to GPUs (at least four times lower, according to internal Google reports) and their 67% lower energy expenditure have already opened a crack in Nvidia’s monopoly, with some deals discussed in late November.
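The two claims are stated in different forms (a "times lower" factor and a "percent lower" reduction), so it can help to put them on the same scale. The sketch below, in Python, converts each into the fraction of the GPU baseline that remains; the function names are hypothetical, and the inputs are simply the figures quoted above, not independent measurements.

```python
def fraction_from_factor(times_lower: float) -> float:
    """'N times lower' means 1/N of the baseline remains."""
    return 1.0 / times_lower

def fraction_from_pct(pct_lower: float) -> float:
    """'P% lower' means (100 - P)% of the baseline remains."""
    return 1.0 - pct_lower / 100.0

tpu_cost_fraction = fraction_from_factor(4)   # inference cost vs. GPU baseline
tpu_energy_fraction = fraction_from_pct(67)   # energy use vs. GPU baseline

print(f"TPU inference cost: {tpu_cost_fraction:.0%} of GPU baseline or less")
print(f"TPU energy use: {tpu_energy_fraction:.0%} of GPU baseline")
print(f"Energy reduction as a factor: about {1 / tpu_energy_fraction:.1f}x")
```

Read this way, the quoted figures imply an inference bill of at most a quarter of the GPU baseline and energy use of about a third, assuming the reported numbers hold for a comparable workload.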

Anthropic reached an agreement for the purchase of an additional 1 million TPUs, with an estimated value of $42 billion. Meanwhile, Meta plans to rent TPUs through Google Cloud in 2026 and install on-premise TPU pods in its data centers by 2027. This is despite Meta remaining Nvidia’s largest customer, with $70-72 billion in capital expenditures planned for 2025.
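As a rough sanity check on the scale of the Anthropic deal, the quoted figures imply an average value per chip. This is a back-of-the-envelope Python sketch only: the deal value is an estimate, and it may cover more than the hardware itself (for example cloud capacity or services), so the result should not be read as a unit price.

```python
deal_value_usd = 42e9    # estimated deal value quoted above
tpu_count = 1_000_000    # additional TPUs quoted above

# Average value per TPU implied by the two quoted figures.
implied_value_per_tpu = deal_value_usd / tpu_count

print(f"Implied value per TPU: ${implied_value_per_tpu:,.0f}")  # $42,000
```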

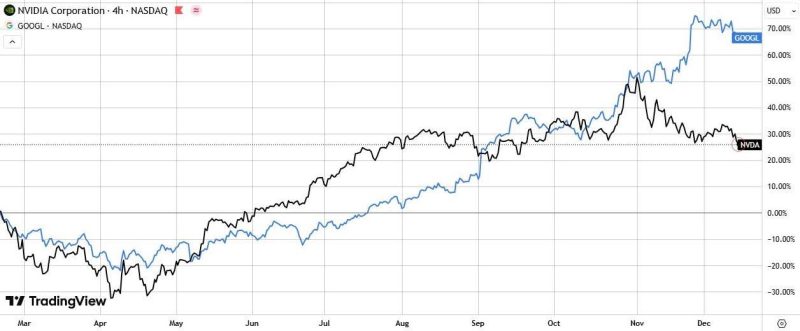

Placing Nvidia’s chart alongside Google’s, one can see that performance has been relatively similar from the lows touched in April, while diverging significantly from late November onward.

But hardware isn’t everything. Nvidia maintains an enormous advantage in the software ecosystem. CUDA, cuDNN, and TensorRT constitute the de facto substrate for AI development and deployment at scale. Switching from CUDA to XLA (the TPU compiler) requires rewriting or retuning code, managing different performance bottlenecks, and sacrificing multi-cloud portability. Nvidia GPUs are available on AWS, Azure, and Google Cloud and support multiple frameworks (PyTorch, MXNet, Keras). TPUs, by contrast, are currently offered only through Google Cloud, meaning that developers remain tied to Google’s policies without any flexibility. If Google suddenly decided to raise prices, those developers would be forced to rewrite everything in order to move elsewhere. This, for now, is the primary reason why many companies still hesitate to invest heavily in TPUs despite their lower costs.

Conclusions:

The performance divergence between Nvidia and Google in 2025 reflects a recalibration of the AI market. Nvidia maintains undisputed leadership in training diverse models and software flexibility, but its dominance in AI accelerators is under growing pressure. Google’s TPUs have demonstrated technical and economic superiority in large-scale inference — the segment that will dominate AI’s future.

The AI market is evolving from a GPU monopoly toward a heterogeneous ecosystem. For analysts and investors, it will be essential to evaluate the context daily, as it is in a state of continuous evolution. Nvidia remains a dominant player, but the era of unchallenged dominance is waning.

The real question is no longer “GPUs or TPUs?” but “which mix optimizes performance, cost, and flexibility for each specific workload?” In this new paradigm, both architectures will play critical roles. Still, power dynamics — and stock valuations — will continue to reflect who captures the value of the rapidly expanding inference economy.